The Clinical Paradox

From Data Overload to Connected Understanding

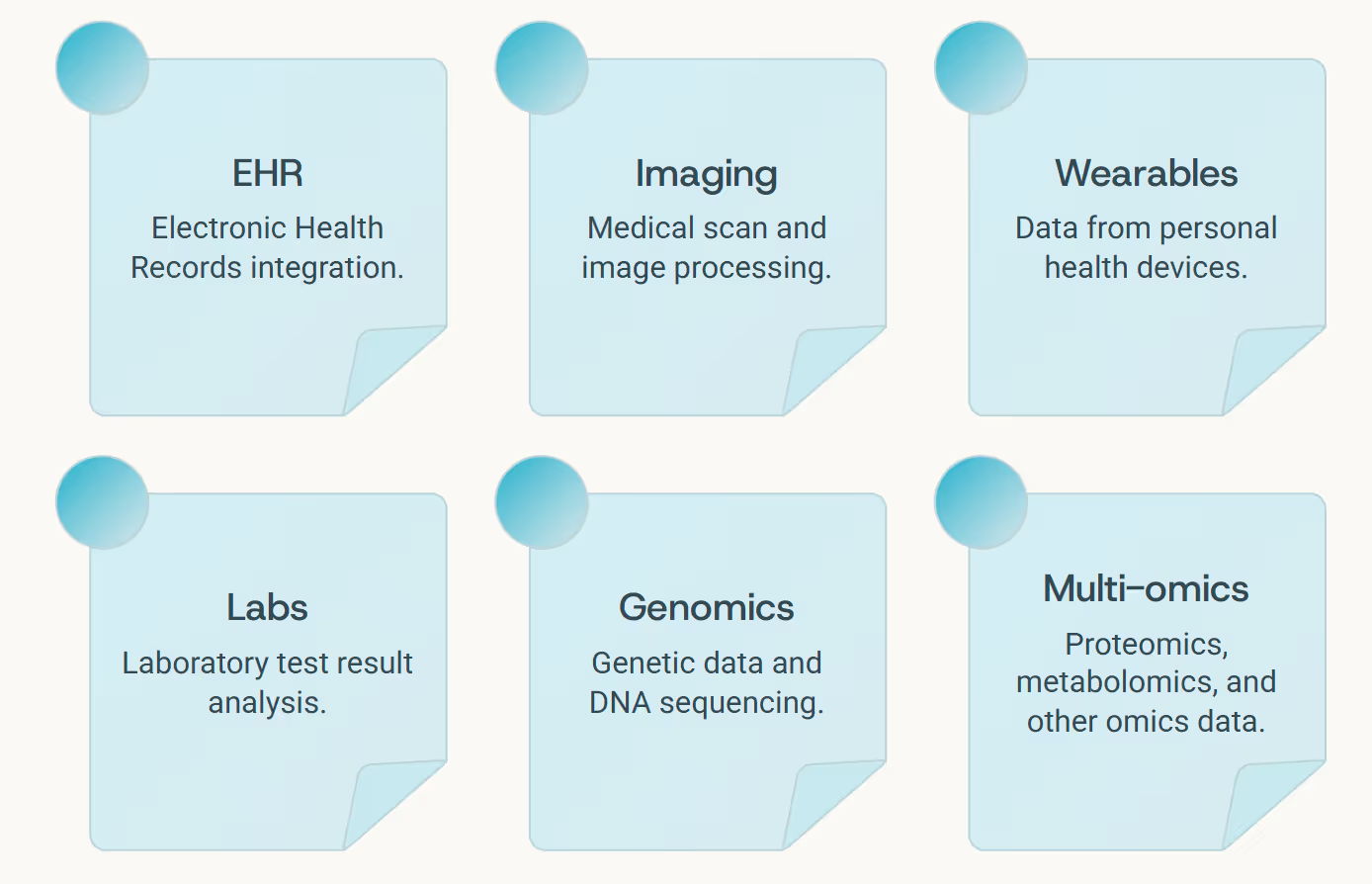

Every modern clinician stands before a paradox - too much information, too little insight. Electronic health records log transactions, not stories. Medical imaging captures anatomical structure but not biological meaning. Wearable devices measure behaviors without interpretation. Lab results arrive as isolated numbers, disconnected from the patient's lived experience.

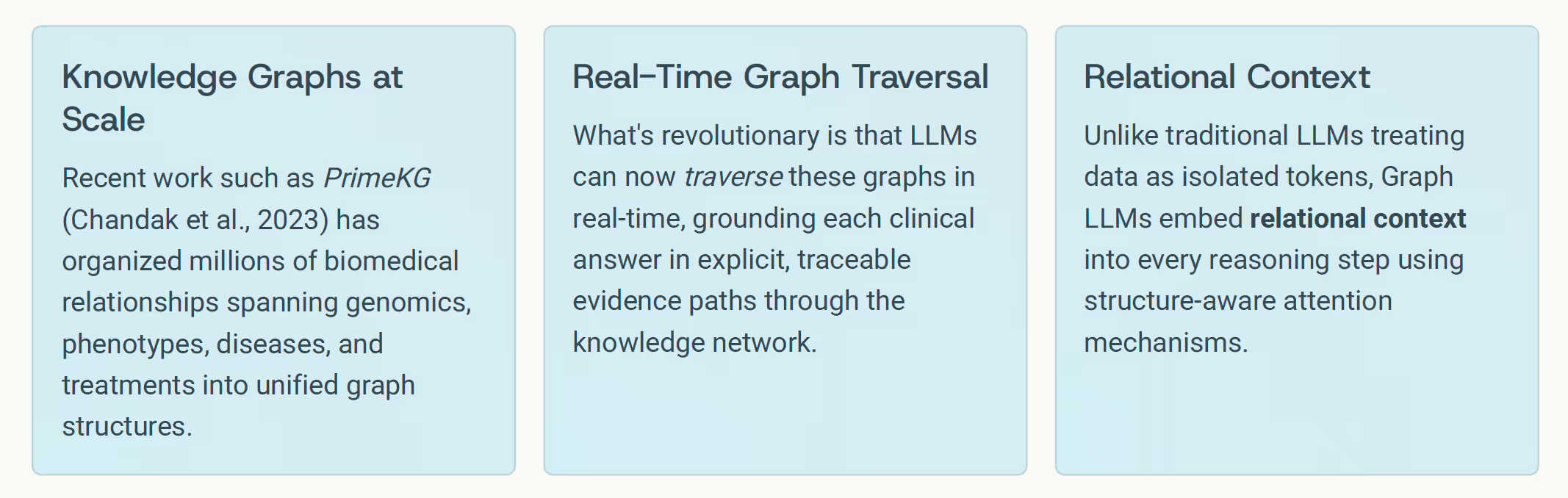

The real frontier in medical AI isn't collecting more data - it's connecting it in ways that reveal true understanding. This is where Graph LLMs change everything: an emerging class of reasoning models that combine language understanding with structural knowledge, designed to think not just about data, but through it.

Think of them as the neural architecture for connected intelligence: models that don't just read medical data, but actively map the terrain of human health, discovering pathways between cause and effect that traditional AI systems miss entirely.

The Graph Advantage

Graph LLMs trace relationships between symptoms, signals, and underlying biology in ways that mirror human clinical reasoning - organizing knowledge as interconnected networks rather than isolated facts.

The Graph Mind of Medicine

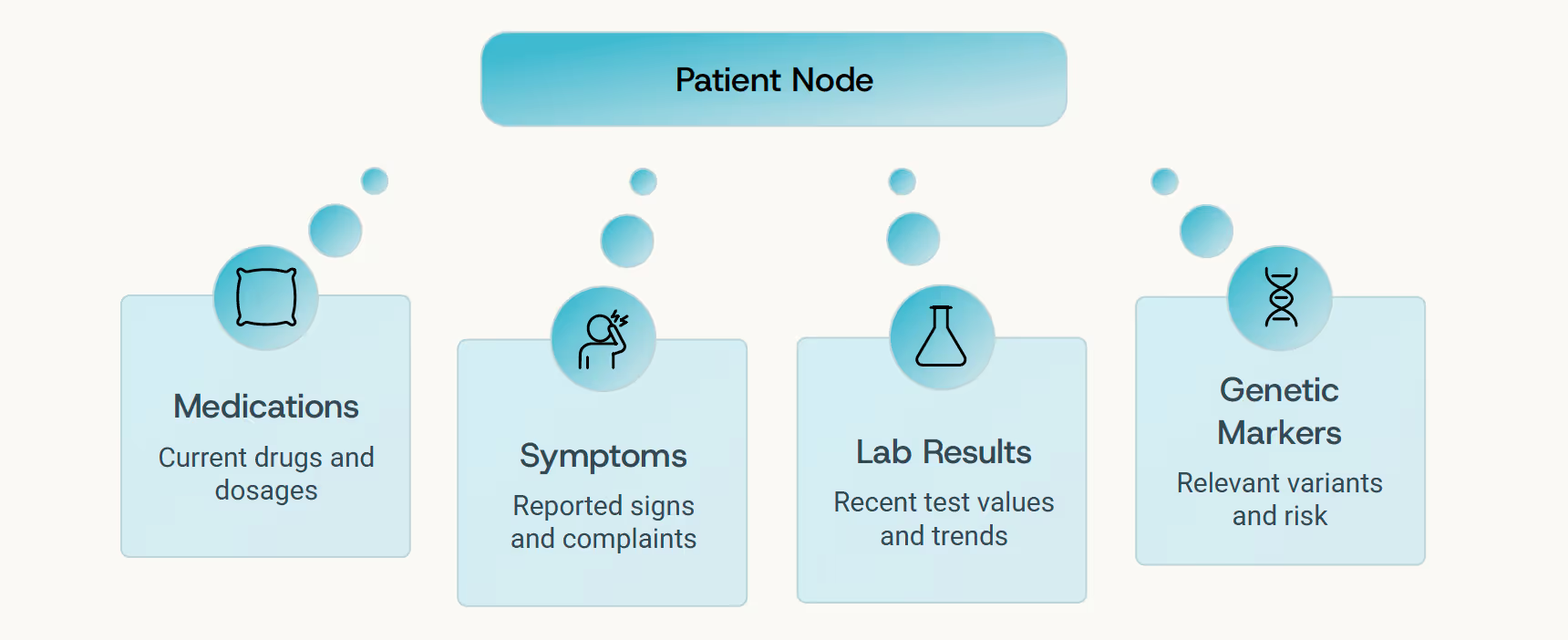

In clinical reasoning, context is everything. A laboratory result becomes meaningful only when understood alongside current medications, lifestyle patterns, genetic predispositions, and medical history. Graph LLMs bring this structural thinking natively into AI: they organize medical knowledge as a network of nodes and edges - patients, genes, lab values, drugs, clinical outcomes - and reason over those connections just as an experienced clinician would.

This is not abstract theory - it's a fundamental shift in how AI systems understand medicine. When a Graph LLM reads a patient chart, it doesn't simply summarize the contents. It navigates the information landscape, mentally connecting symptoms to biological mechanisms, treatments to outcomes, risks to protective factors - creating a living map of clinical understanding.
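The network of nodes and edges described above can be sketched in a few lines. This is a minimal illustration, not a production schema: the `Node` and `ClinicalGraph` names, the relation labels, and the patient values are all hypothetical and chosen only to show the shape of the data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    kind: str  # e.g. "patient", "drug", "lab", "gene", "outcome"
    name: str


class ClinicalGraph:
    """Tiny directed, labeled graph: node -> [(relation, node), ...]."""

    def __init__(self):
        self.edges = defaultdict(list)

    def add(self, src, relation, dst):
        self.edges[src].append((relation, dst))

    def neighbors(self, node, relation=None):
        # Optionally filter by relation label.
        return [dst for rel, dst in self.edges.get(node, [])
                if relation is None or rel == relation]

    def context(self, node):
        # Render a node's immediate neighborhood as plain-text facts,
        # e.g. for inclusion in a model prompt.
        return [f"{node.name} --{rel}--> {dst.name}"
                for rel, dst in self.edges.get(node, [])]


# Made-up example data (assumption, for illustration only).
patient = Node("patient", "patient-001")
metformin = Node("drug", "metformin")
hba1c = Node("lab", "HbA1c 8.1%")
t2d = Node("outcome", "type 2 diabetes")

g = ClinicalGraph()
g.add(patient, "takes", metformin)
g.add(patient, "has_result", hba1c)
g.add(patient, "diagnosed_with", t2d)
g.add(metformin, "treats", t2d)

facts = g.context(patient)
```

Here `facts` holds one line per edge leaving the patient node, which is the kind of structured context a language model could be given alongside the chart text.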

"Structure-aware reasoning transforms causality from correlation into true mechanistic understanding - the foundation of trustworthy medical AI."

The Temporal Graph: Learning from the Body's Rhythms

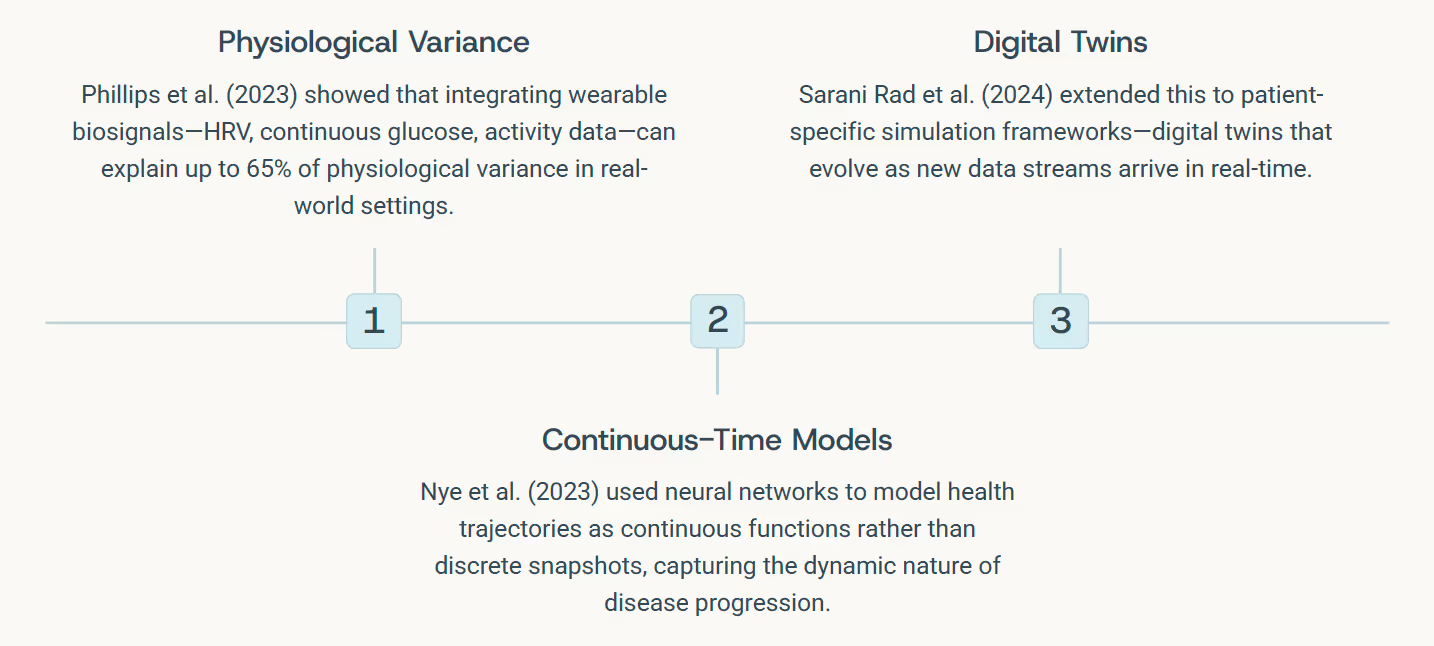

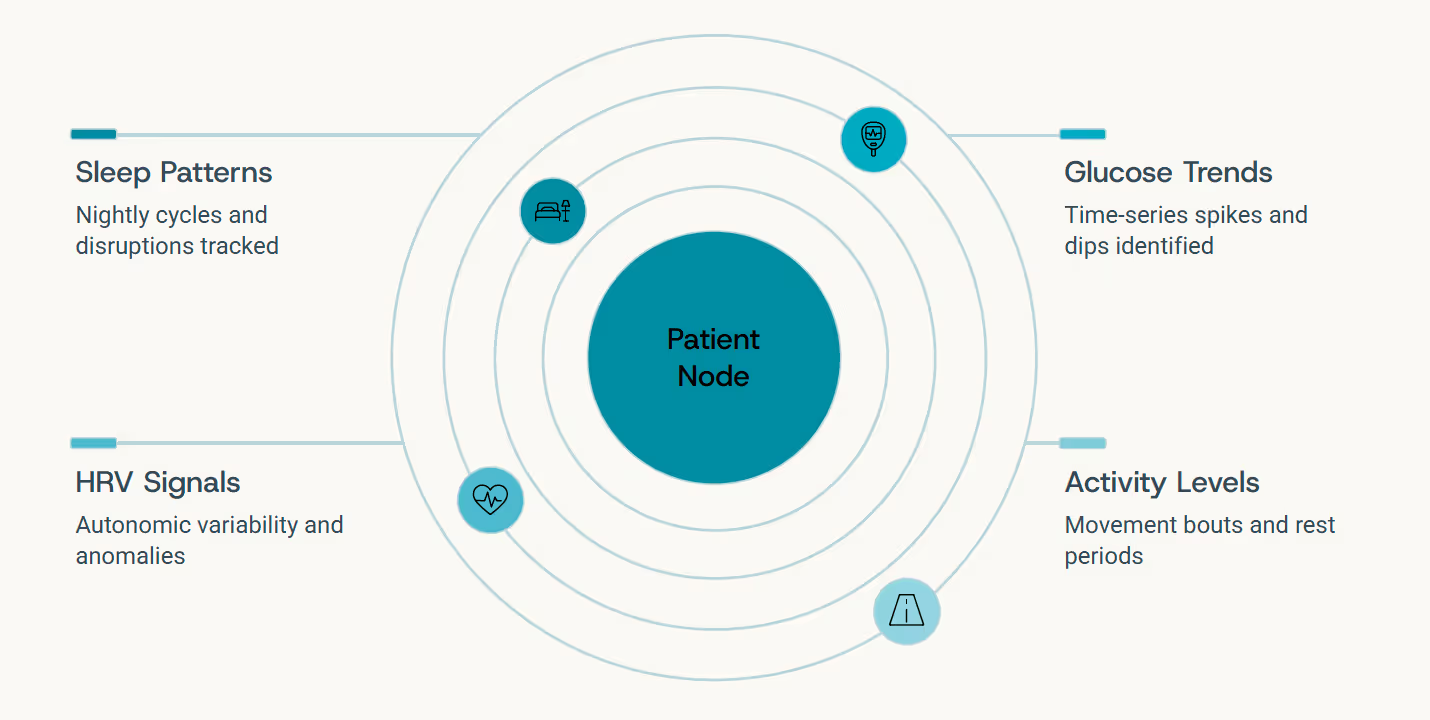

If medical imaging captures space, wearable biosensors capture time. Every heart rate variability measurement, glucose fluctuation, sleep cycle disruption, and activity pattern adds another edge in motion to the health graph - a continuously updated chronicle of how the body behaves moment by moment, day by day.

Graph LLMs can reason over these temporal signals dynamically, understanding patterns not as isolated time-points but as connected sequences - edges that evolve and interact across the graph. They turn raw time-series into candidate interpretations for a clinician to weigh, producing statements like:

"Your recent sleep disruptions correlate with rising inflammatory markers and earlier glucose excursions - suggestive of evolving metabolic stress that warrants attention."
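One simple way to picture "edges in motion" is to link events from different signals when one follows the other within a time window. The sketch below assumes hypothetical signal names, made-up readings, and a 12-hour window; it finds temporal co-occurrence only, which is a starting point for interpretation and not evidence of causation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Event:
    signal: str
    time: datetime
    value: float


def link_events(events, source, target, window):
    """Return (source_event, target_event) pairs where the target
    event follows the source event within `window`."""
    ordered = sorted(events, key=lambda e: e.time)
    links = []
    for a in ordered:
        if a.signal != source:
            continue
        for b in ordered:
            gap = b.time - a.time
            if b.signal == target and timedelta(0) < gap <= window:
                links.append((a, b))
    return links


# Made-up readings (assumption, for illustration only).
events = [
    Event("short_sleep", datetime(2025, 1, 1, 7), 4.5),
    Event("glucose_excursion", datetime(2025, 1, 1, 13), 190.0),
    Event("short_sleep", datetime(2025, 1, 3, 7), 5.0),
    Event("glucose_excursion", datetime(2025, 1, 5, 13), 185.0),
]

edges = link_events(events, "short_sleep", "glucose_excursion",
                    timedelta(hours=12))
```

Each pair in `edges` could become a timestamped edge in the patient graph, so that later reasoning sees the sequence, not just the individual readings.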

Context Engineering: Why Prompting & Tooling Matter

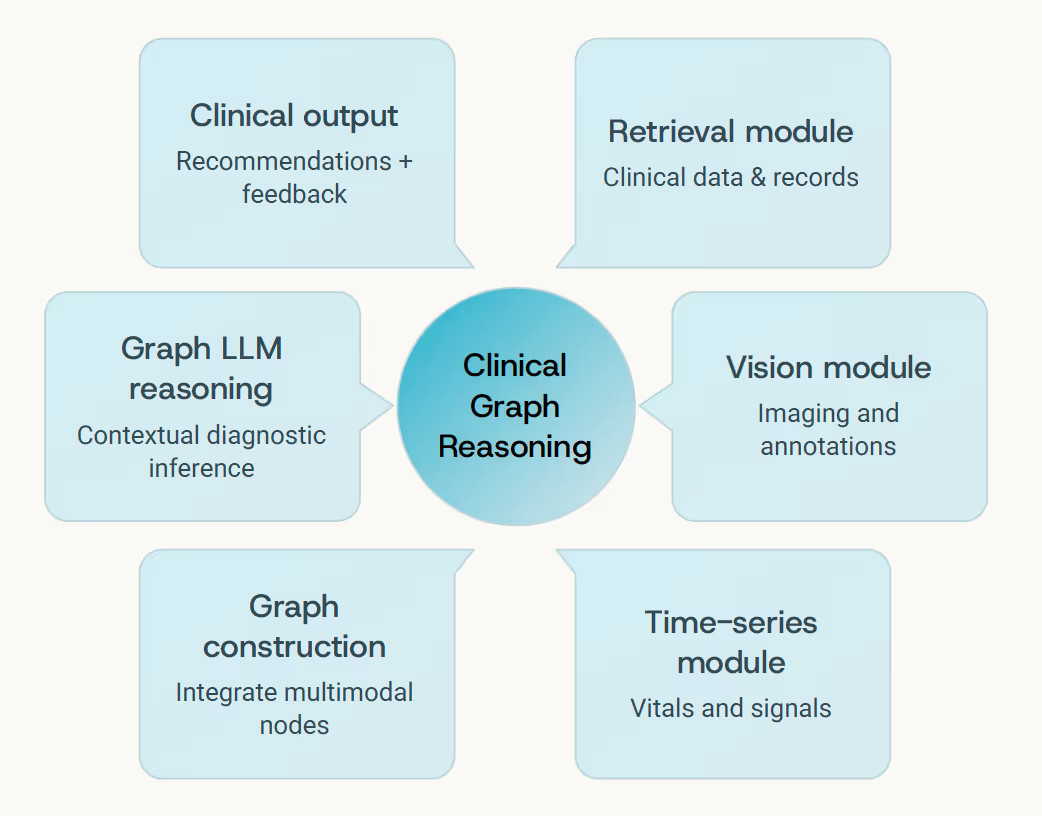

Building sophisticated medical AI systems isn't just about selecting the most advanced model - it's fundamentally about how you structure the context, design the tools, and orchestrate the feedback loops. This discipline, which we call context engineering, determines whether a Graph LLM becomes merely another data processor or a true clinical reasoning partner.

01 Retrieval-Augmented Pipelines

Fetch the latest research evidence, clinical guidelines, and case studies relevant to the current patient context

02 Modular Tool Design

Specialized components for imaging feature extraction, time-series encoding, lab result interpretation, and document retrieval

03 Graph Construction

Dynamic assembly of patient-specific knowledge graphs integrating all available data modalities and their relationships

04 Structured Reasoning

Graph LLM traverses the constructed network, generating traceable, evidence-grounded clinical explanations

05 Human Feedback Loops

Clinician validation reinforces correct reasoning pathways, similar to reinforcement learning from human feedback (RLHF) but grounded in verified medical judgment
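The five steps above can be sketched as a chain of small functions that pass a shared context object along. Everything here is a stand-in: the stage names, the `CaseContext` fields, and the stub data are assumptions, and a real system would replace each stub with a retrieval service, modality-specific tools, a model call, and an actual clinician review.

```python
from dataclasses import dataclass, field


@dataclass
class CaseContext:
    question: str
    evidence: list = field(default_factory=list)
    features: dict = field(default_factory=dict)
    graph: list = field(default_factory=list)  # (subject, relation, object)
    explanation: str = ""
    approved: bool = False


def retrieve(ctx):
    # Step 01: stand-in for a retrieval call against guidelines or literature.
    ctx.evidence.append("guideline: placeholder text")
    return ctx


def run_tools(ctx):
    # Step 02: each modality-specific tool contributes named features.
    ctx.features["lab"] = {"HbA1c": 8.1}
    return ctx


def build_graph(ctx):
    # Step 03: turn extracted features into graph triples.
    for tool, values in ctx.features.items():
        for name, value in values.items():
            ctx.graph.append(("patient", f"has_{tool}", f"{name}={value}"))
    return ctx


def reason(ctx):
    # Step 04: a real system would prompt a model with the graph;
    # this stub just cites the graph and counts the sources.
    cited = "; ".join(" ".join(triple) for triple in ctx.graph)
    ctx.explanation = (f"Q: {ctx.question} | graph: {cited} "
                       f"| sources: {len(ctx.evidence)}")
    return ctx


def clinician_review(ctx, reviewer=lambda c: bool(c.evidence and c.graph)):
    # Step 05: the default reviewer is a placeholder for a human decision.
    ctx.approved = reviewer(ctx)
    return ctx


PIPELINE = [retrieve, run_tools, build_graph, reason, clinician_review]


def run(question):
    ctx = CaseContext(question)
    for stage in PIPELINE:
        ctx = stage(ctx)
    return ctx


result = run("Is glycemic control adequate?")
```

Keeping each stage as a separate function is one way to make tool invocation and graph construction individually testable and replaceable.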

In large-scale life-sciences workflows, the difference between applying a flat LLM and deploying a Graph LLM often comes down to context engineering: which tools get invoked when, how graphs are constructed and updated, how reasoning paths are validated against clinical knowledge, and how the system learns from expert feedback over time.

Context is Architecture: The prompts, persona conditioning, tool orchestration, and feedback mechanisms you design are as critical as the foundation model itself. Context engineering transforms capability into clinical utility.

Agents in the Lab: Life-Sciences Systems That Raise the Bar

Recent developments illustrate how all these components - multimodal reasoning, graph structures, context engineering, and reinforcement learning - converge in practice. Advanced biomedical AI agents now combine these capabilities to support clinical interpretation, accelerate discovery, and streamline operational workflows in ways that weren't possible just months ago.

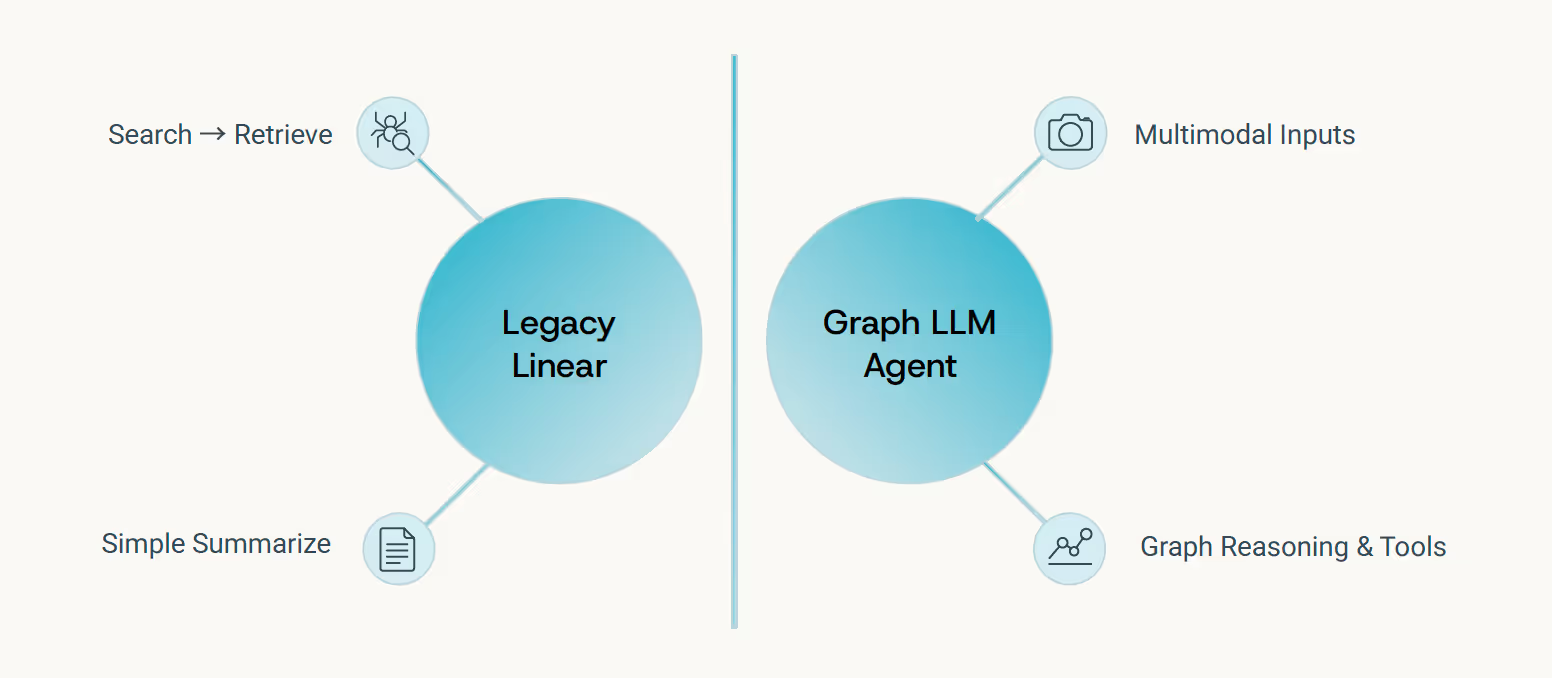

Beyond Search and Summarize

What distinguishes modern life-sciences agents from earlier evidence platforms - which primarily offered "search and summarize" functionality - is their ability to perform genuine multimodal reasoning. They don't just retrieve and condense; they connect, infer, and explain.

These systems build dynamic, patient-specific graphs rather than conducting static database lookups. They embed reasoning chains that extend beyond retrieval to genuine inference and mechanistic explanation. For example, a modern agent can analyze a patient's genetic profile, imaging scans, and electronic health records to identify subtle disease patterns, predict treatment response, and even suggest novel therapeutic avenues - capabilities far beyond simply finding relevant articles. They adapt to user personas and workflow contexts, and they incorporate feedback to improve over time, transforming raw data into actionable insights for clinicians and researchers.

In practice, these agents go far beyond basic information retrieval. They operate as sophisticated reasoning engines capable of navigating vast, heterogeneous data landscapes. They leverage tools to interrogate databases, execute computational biology simulations, and interpret complex data types like single-cell transcriptomics or proteomic profiles. Their internal "thought processes" are often made transparent through explainable AI techniques, allowing clinicians and researchers to understand the basis of their recommendations.

- Multimodal Integration - Reasoning across imaging, wearables, labs, and clinical text simultaneously

- Dynamic Graphs - Patient-specific knowledge structures that update with each new data point

- Reasoning Chains - Traceable inference paths from evidence to conclusion with cited sources

- Contextual Adaptation - Learning user preferences, workflow needs, and communication styles
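A traceable reasoning chain can be pictured as a path through the graph in which every edge carries its own citation. The sketch below uses breadth-first search over a handful of edges; the edge contents and the `source-A` style citation labels are made-up placeholders and are not clinical claims.

```python
from collections import deque

# (source, relation, target, citation) - placeholder values only.
EDGES = [
    ("short sleep", "raises", "cortisol", "source-A"),
    ("cortisol", "increases", "insulin resistance", "source-B"),
    ("insulin resistance", "elevates", "fasting glucose", "source-C"),
    ("short sleep", "reduces", "activity", "source-D"),
]


def reasoning_chain(edges, start, goal):
    """Breadth-first search returning the shortest list of edges
    from start to goal, or None if no path exists."""
    adjacency = {}
    for edge in edges:
        adjacency.setdefault(edge[0], []).append(edge)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for edge in adjacency.get(node, []):
            if edge[2] not in seen:
                seen.add(edge[2])
                queue.append((edge[2], path + [edge]))
    return None


chain = reasoning_chain(EDGES, "short sleep", "fasting glucose")
for src, rel, dst, cite in chain:
    print(f"{src} {rel} {dst} [{cite}]")
```

Because the result is a list of edges, each step of the explanation can be shown to a reviewer together with the source it rests on.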

This represents a fundamental leap in capability - from systems that help you find information to systems that help you understand it. The architecture weaves together the complete chain: data ingestion, tool orchestration, graph reasoning, contextual explanation, and continuous learning from expert feedback.

Ultimately, these advanced agents are not just processing data; they are actively participating in the scientific and clinical process. By intelligently combining diverse data sources, applying sophisticated reasoning, and learning from human interaction, they are poised to revolutionize how we approach complex challenges in life sciences and healthcare, moving us closer to truly personalized and predictive medicine.

Reasoning With Reinforcement: Aligning AI With Clinical Judgment

Medical reasoning isn't only about processing data - it's about exercising judgment under uncertainty. Foundation models learn from statistical patterns in training data, but physicians reason from evidence, experience, and an understanding of what they don't know. This demands a new form of reinforcement: not just reinforcement learning from human feedback (RLHF), but reinforcement learning from verifiable rewards (RLVR), where the model is rewarded for outputs that can be checked against a known correct answer.

Recent work by Zhang et al. (2025) demonstrates that RLVR-trained medical models achieve reasoning accuracy comparable to models trained with supervised fine-tuning, while offering substantially improved generalization to novel clinical scenarios and enhanced traceability of inference steps.

Complementary research by Lin et al. (2025) shows how combining domain-specific knowledge injection with reinforcement loops reduces hallucinations and increases robustness when models encounter edge cases or ambiguous presentations - precisely where clinical judgment matters most.

Verified Reasoning Paths

Each inference step validated against established medical knowledge and clinical guidelines

Human Expert Feedback

Clinician review refines context templates, tool invocations, and reasoning strategies

Auditable Decisions

Complete reasoning trails logged and available for review, learning, and quality assurance
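To make "verifiable" concrete, here is a minimal sketch of a rule-based reward for multiple-choice questions: the output must follow a fixed format, and the final answer is compared with a known key. The tag names, the option letters A to E, and the score values are assumptions for illustration and are not taken from Zhang et al. or Lin et al.

```python
import re

# Expected shape: <think>...</think><answer>X</answer>
ANSWER_PATTERN = re.compile(
    r"<think>.+?</think>\s*<answer>\s*([A-E])\s*</answer>",
    re.DOTALL,
)


def verifiable_reward(completion, gold):
    """Score a completion against a known answer key.

    -1.0 if the required format is missing,
     0.0 if the format is right but the answer is wrong,
     1.0 if the format is right and the answer matches.
    """
    match = ANSWER_PATTERN.fullmatch(completion.strip())
    if match is None:
        return -1.0
    return 1.0 if match.group(1) == gold else 0.0


good = "<think>Findings point to option B.</think><answer>B</answer>"
r_correct = verifiable_reward(good, "B")
r_wrong = verifiable_reward(good, "C")
r_malformed = verifiable_reward("The answer is B", "B")
```

Because the reward depends only on checkable properties of the output, every score can be logged and audited, which fits the verified and auditable steps listed above.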

In the context of Graph LLMs combined with multimodal workflows and context engineering, these reinforcement loops become integral to system architecture: human feedback continuously refines how contexts are constructed, which tools get invoked when, and how reasoning paths traverse the knowledge graph. The result is AI that reasons with structure and citations, not just statistical confidence.

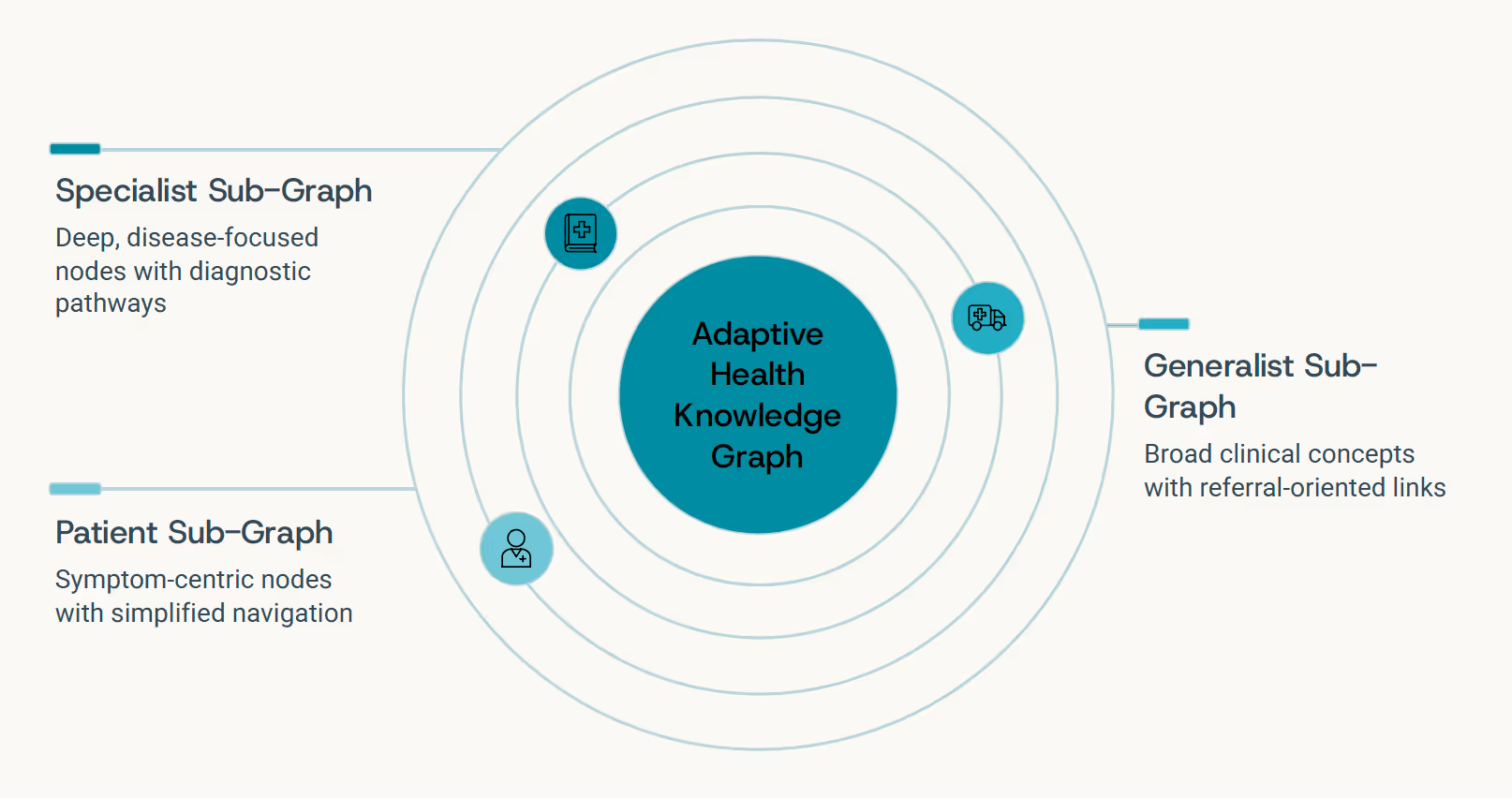

Personalization: Graphs That Adapt to People

No two clinicians think alike - and no two patients experience health the same way. The next evolution of medical Graph LLMs lies in adaptive reasoning: systems that learn not just medical knowledge, but how you think, communicate, and prioritize information. This is personalization at the level of cognitive style, not just content filtering.

The Specialist Perspective

Receives in-depth mechanistic explanations with molecular pathways, detailed citations to primary literature, and nuanced discussion of alternative hypotheses

The Generalist View

Gets high-level summaries focused on differential diagnosis, actionable next steps, and clear decision points with supporting evidence

The Patient Experience

Accesses clear, jargon-free explanations emphasizing lifestyle implications, treatment options in plain language, and what to expect
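The three perspectives above can be treated as settings that shape the instructions sent to a model. This is only a sketch: the `Persona` fields, the role keys, and the wording of the instructions are hypothetical, and real personalization would learn these settings from interaction history instead of hard-coding them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    label: str
    focus: str         # what the explanation should emphasize
    citations: bool    # whether to cite primary literature
    vocabulary: str    # "technical" or "plain"


PERSONAS = {
    "specialist": Persona(
        "specialist", "molecular pathways and alternative hypotheses",
        True, "technical"),
    "generalist": Persona(
        "generalist", "differential diagnosis and next steps",
        True, "technical"),
    "patient": Persona(
        "patient", "lifestyle implications and what to expect",
        False, "plain"),
}


def build_instructions(role, finding):
    """Compose model instructions for one finding, tailored to the reader."""
    p = PERSONAS[role]
    lines = [
        f"Audience: {p.label}.",
        f"Explain this finding: {finding}",
        f"Focus on {p.focus}.",
        ("Use plain, jargon-free language." if p.vocabulary == "plain"
         else "Use standard clinical terminology."),
        ("Cite primary sources." if p.citations
         else "Do not include literature citations."),
    ]
    return "\n".join(lines)


patient_prompt = build_instructions("patient", "elevated HbA1c")
```

The same finding produces different instructions for each role, so the underlying evidence stays fixed while depth and vocabulary change.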

Pioneering work by Sun et al. (2024) on "Knowledge Graph Tuning" demonstrates how models can personalize through interaction history - extracting user-specific knowledge triples that capture individual reasoning patterns. Wu et al. (2024) showed real-time persona alignment that adapts communication style on the fly. Ma et al. (2025) extended this to clinical dialogue systems that prime context based on accumulated user preferences.

"Each user's perspective is a sub-graph of the complete medical knowledge network. Adaptive systems learn to traverse that graph differently for different people - adjusting depth, terminology, and narrative style to match how you think."

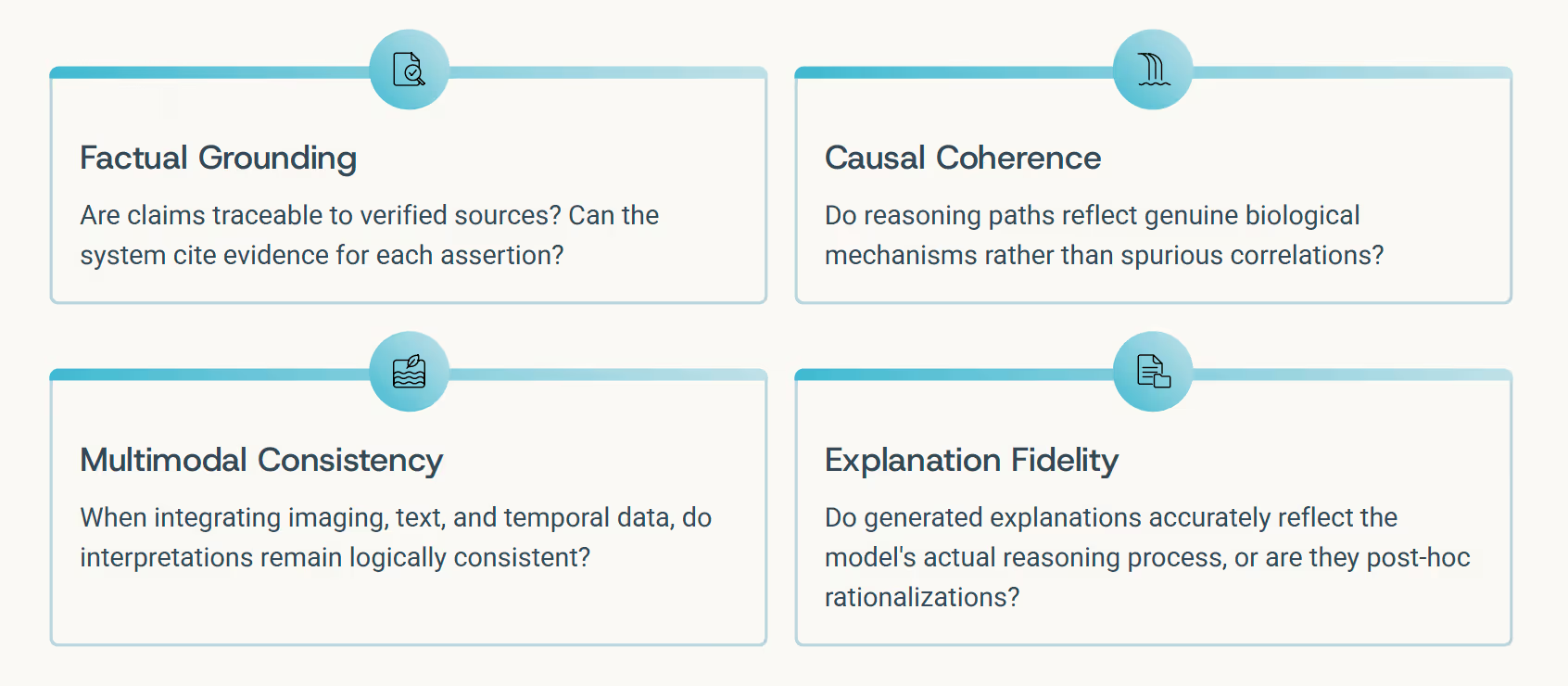

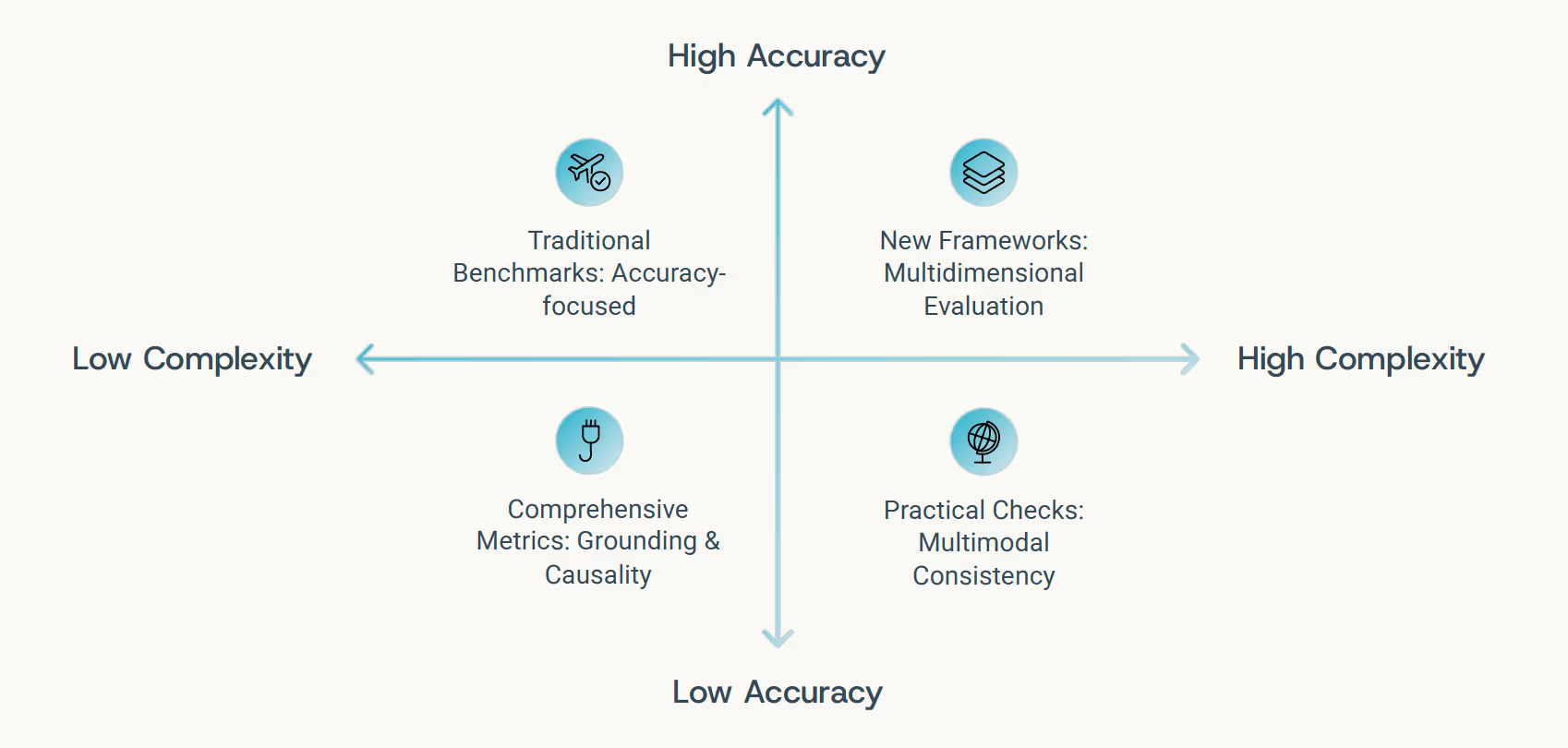

Evaluating the New Intelligence

As medical AI systems evolve to encompass multimodal reasoning, temporal dynamics, graph structures, and agentic workflows, conventional evaluation benchmarks become insufficient. Accuracy alone doesn't capture whether a system reasons responsibly. We need frameworks that assess not just what models conclude, but how they reach those conclusions.

Emerging Evaluation Frameworks

- MultiMedEval (Royer et al., 2024): Comprehensive benchmarking across multiple medical reasoning tasks and modalities

- MEDIC (Kanithi et al., 2024): Evaluates grounded reasoning with explicit evidence linking requirements

- MedCheck (Ma et al., 2025): Assesses causal and factual coherence in clinical language models

The Evaluation Paradigm Shift:

From "Did the AI get it right?" to "Did it reason transparently, responsibly, and with appropriate epistemic humility about what it knows and doesn't know?"

Toward the Living Graph of You

Every human life can be represented as a vast, continuously evolving graph - an intricate web connecting molecular signals, physiological measurements, behavioral patterns, environmental exposures, and lived experiences across the dimension of time. Until now, no system could truly reason across that complexity at scale. That changes today.

Graph LLMs, fused with modality-specific foundation models and embedded within expertly context-engineered workflows, represent the first AI systems genuinely capable of reasoning across this multidimensional complexity. They can read your health record like a narrative with themes and turning points, interpret your medical scans as structural evidence connected to function, monitor your wearable data as real-time physiological feedback loops - and weave all of these threads into a coherent, evolving understanding of you.

This is not artificial intelligence as an oracle dispensing answers from on high, but as an organism of understanding: a system that learns continuously, adapts to context, and reasons through connections rather than in isolation.

In time, such systems will not only help doctors make better decisions - they'll help medicine rediscover its essential nature: the science of connections. Because health isn't a collection of data points. It's a story. And for the first time, we have AI that can read it as one.

References

- Chandak, P. et al. (2023). Building a Knowledge Graph to Enable Precision Medicine (PrimeKG). Scientific Data, 10, 79.

- Santos, A. et al. (2022). A Knowledge Graph to Interpret Clinical Proteomics Data. Nature Biotechnology, 40, 695–704.

- Chan, Y.H. et al. (2024). Graph Neural Networks in Brain Connectivity Studies. Frontiers in Neuroscience, 18:1176385.

- Duroux, D. et al. (2023). Graph-Based Multi-Modality Integration for Prediction of Cancer Subtypes. Scientific Reports, 13, 18562.

- Phillips, N.E. et al. (2023). Uncovering Personalized Glucose Responses and Circadian Rhythms Using Wearables. Cell Reports Methods, 3(7):100521.

- Nye, L. et al. (2023). Continuous-Time Neural Networks for Predicting Health Trajectories. arXiv preprint arXiv:2307.04772.

- Sarani Rad, F. et al. (2024). Personalized Diabetes Management with Digital Twins. JMIR Medical Informatics, 12(3):e56471.

- Zhang, S. et al. (2025). Med-RLVR: Emerging Medical Reasoning from a 3B Base Model via Reinforcement Learning. arXiv preprint arXiv:2502.19655.
